Reframing a system dependency into a scalable targeting strategy

A leadership case study on designing flexibility, reliability, and long-term resilience into a research platform.

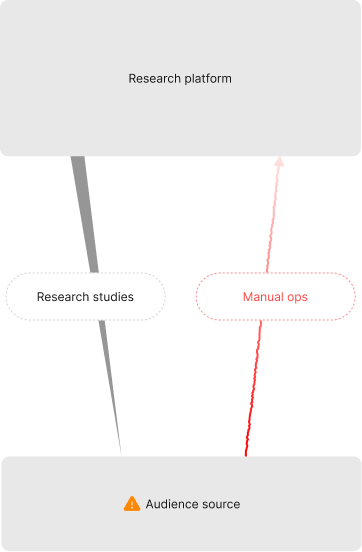

When a closed ecosystem starts showing cracks

For years, the platform operated on a simple, tightly integrated model:

One workflow

One audience source

Predictable, fast outcomes

But as audience availability fluctuated, the impact rippled across the ecosystem:

Study timelines became inconsistent

Ops interventions increased

Quality controls slowed completion

Architecture could not easily support alternative sources

The symptoms were scattered, but the system dependency was not.

My Role: Bring strategic clarity to an urgent, system-level problem

As Director of Product Experience, my responsibility wasn’t tactical delivery.

It was to uncover the real problem and create a north star the entire org could rally behind.

I shaped the work around four leadership pillars:

Reframe the Problem – Expose the underlying system dependency, not the surface-level symptoms.

Establish the UX Direction – Define what a modular, audience-agnostic targeting model should look and feel like.

Anchor Everything to Measurable Outcomes – Speed. Reliability. Scalability. Flexibility.

Provide Strategic Clarity Across Functions – Equip product, engineering, ops, and GTM with mental models they could execute consistently.

This alignment work ensured the initiative stayed cohesive, not fragmented.

Strategic insight & research

Once I reframed the issue at a systems level, I directed the team to validate and deepen our understanding of the workflow. Under that direction, my IC designer led a focused research sprint and lightweight stakeholder workshops. The work revealed that nearly every pain point traced back to the same root cause — major parts of the workflow relied completely on one audience source.

This surfaced the breakthrough insight, directly aligned with my strategic framing:

Qualification needed to happen inside the survey, not before it.

What the research uncovered (under my direction):

Users were frustrated with rigid screening requirements

Internal priorities around quality and speed conflicted

Key workflow steps depended on a single source

Workshops confirmed shared constraints and alignment gaps

What this insight enabled:

A simpler, self-contained qualification experience

Reduced dependency on any single source

A workflow ready for multi-source flexibility and growth

How the team delivered

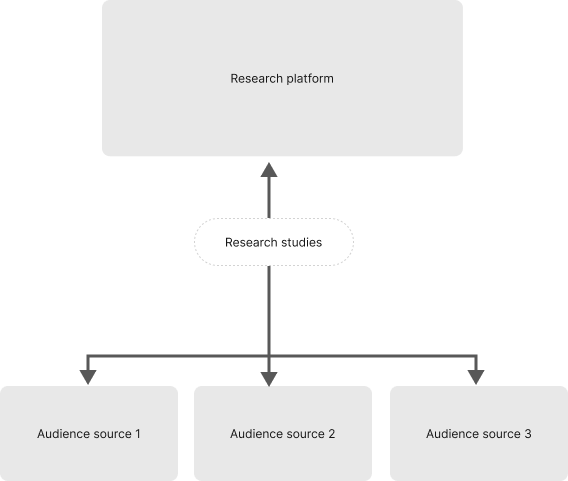

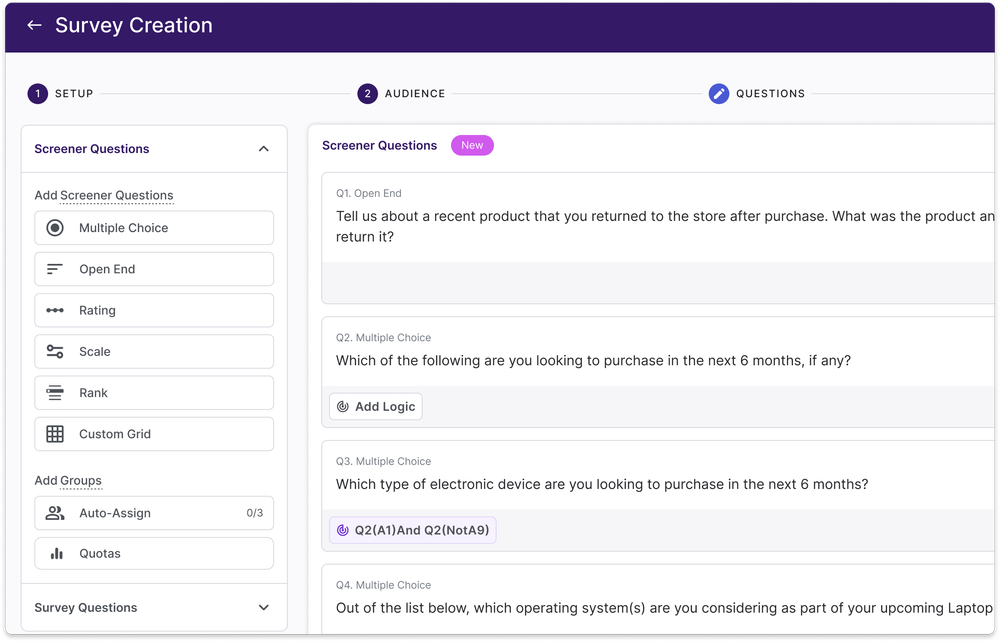

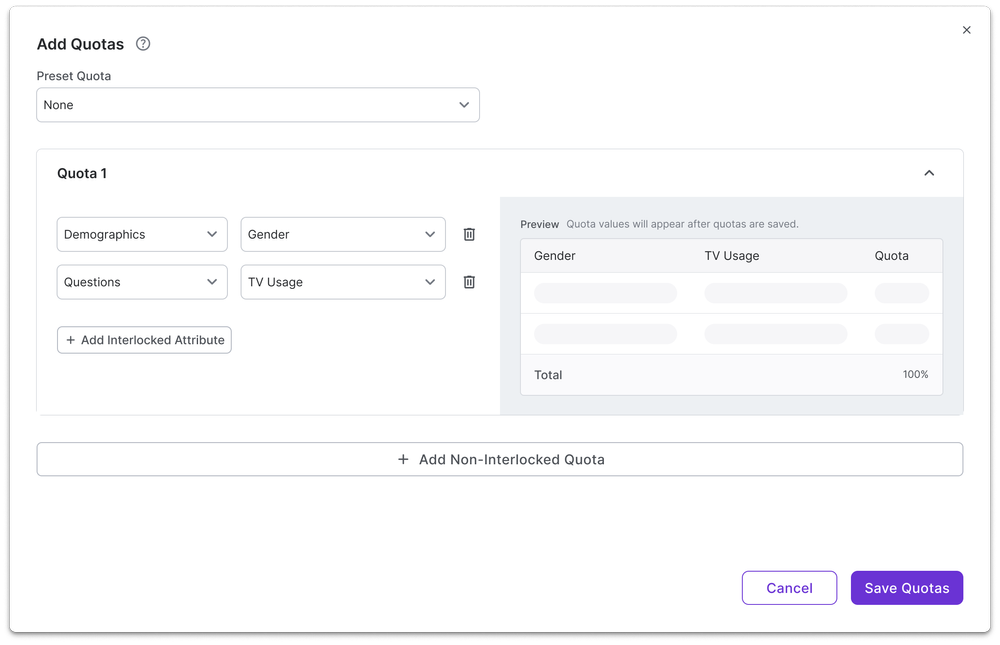

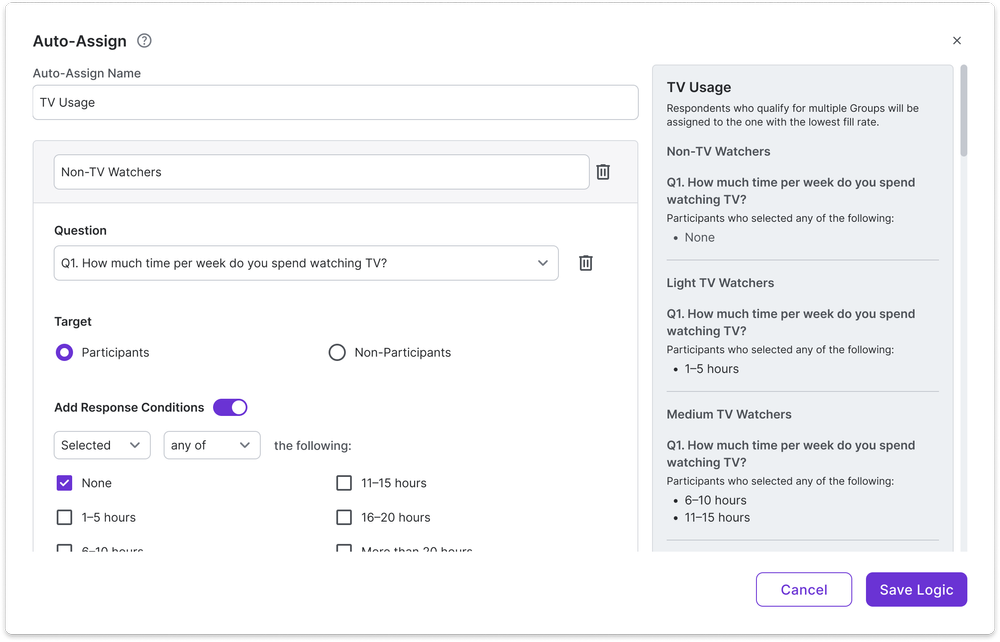

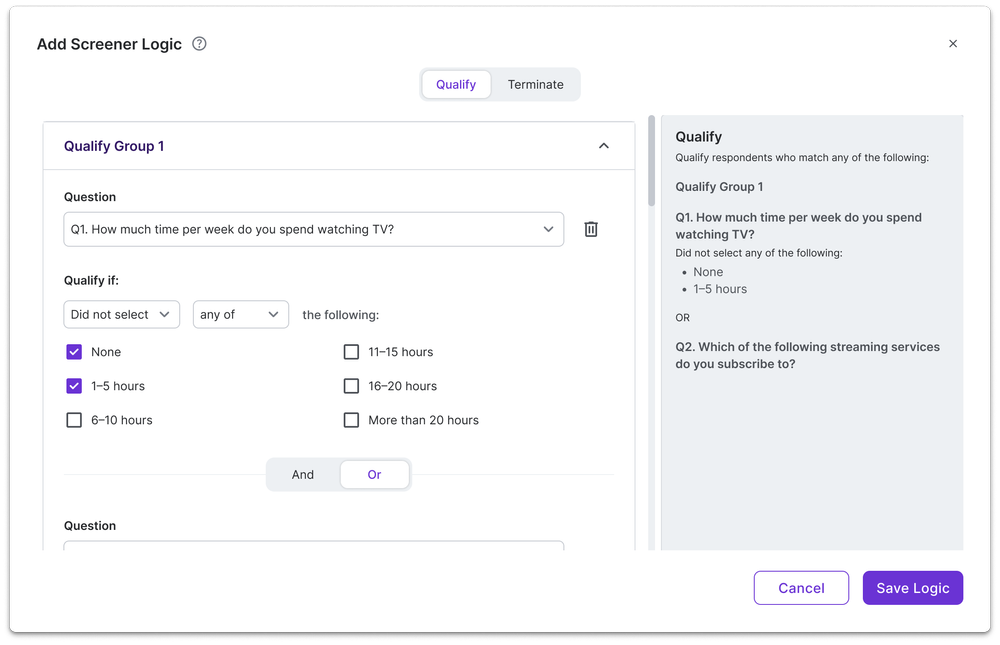

With the strategy set, the design and engineering teams translated the vision into a clearer, more resilient workflow:

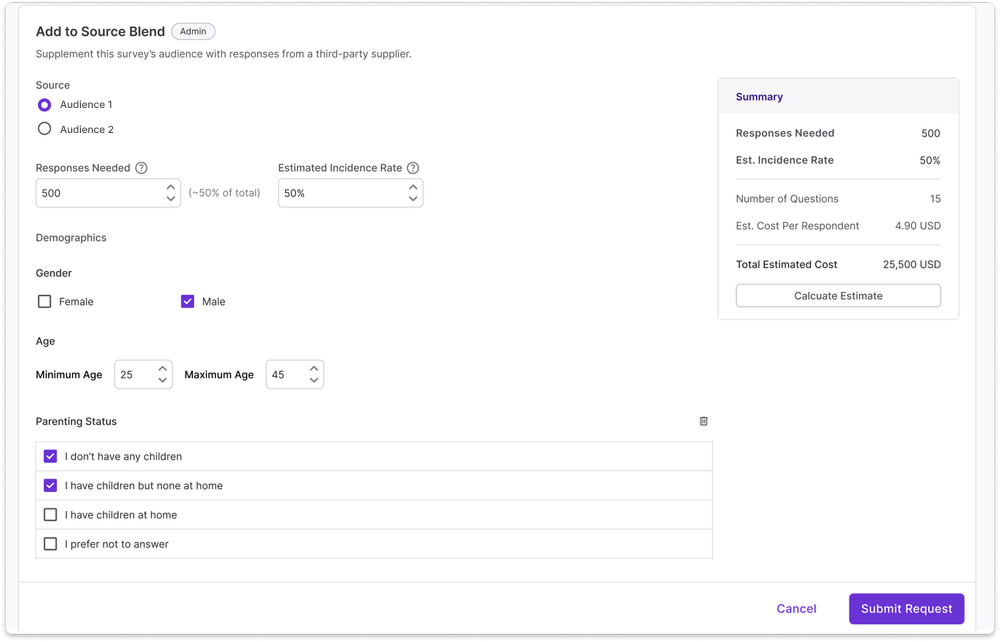

A flexible foundation capable of supporting multiple audience sources through internal tools

Expanded question types beyond multiple choice: open-ended text, scale/rank, and custom grid

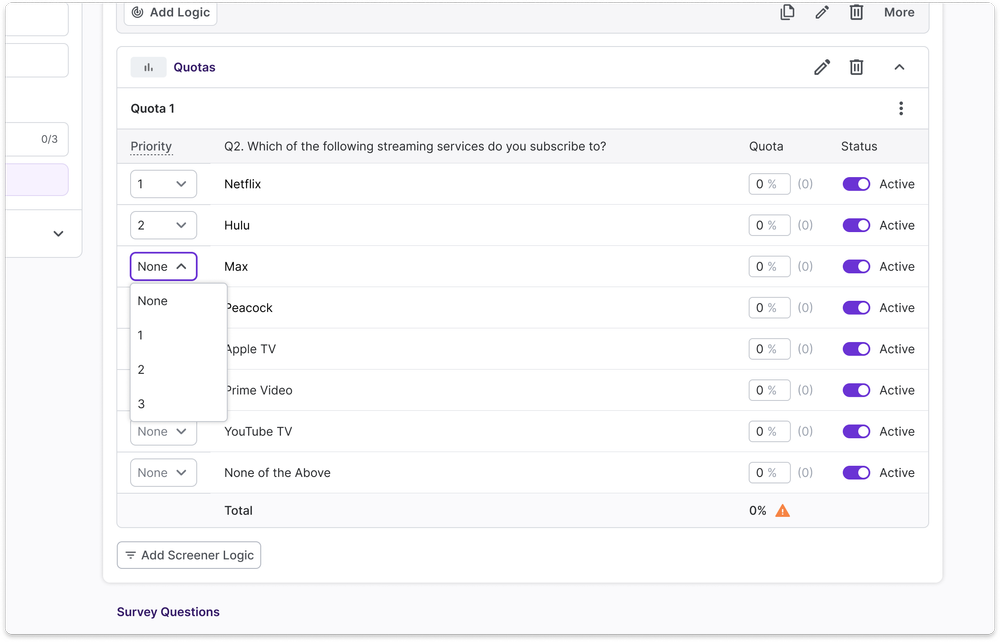

Improved quota setup integrated into the survey experience

Built-in qualification steps directly inside the survey

Streamlined logic controls that simplified complex flows

My role throughout delivery was to keep the work anchored to the system principles — ensuring every solution addressed the root dependency, not just local symptoms.

The outcome

The shift produced meaningful improvements across both short-term performance and long-term scalability.

Short-Term Gains

More predictable study completion

Fewer operational fire drills

A more stable and reliable workflow

Long-Term Impact

Removed the single point of failure

Enabled support for multiple audience sources

Established a future-ready system foundation

This was more than a UX fix — it was a structural redesign of how targeting worked at a platform level.

Reflection

That experience reinforced one of my biggest beliefs as a design leader:

Great UX leadership is systems leadership.

It’s not just about designing screens; it’s about designing the structures that make speed, clarity, and alignment possible across an entire organization.